Archiving Event Data

The deltaDNA platform supports automatic archiving of event data to Amazon Web Services (AWS) using Amazon S3 buckets.

Once your S3 bucket has been configured within the platform, gzip-compressed CSV files containing the event data for your game will be delivered to it automatically.

Bucket Configuration

Follow the AWS guide on creating a bucket to create the bucket that will store your exported event data.

After creating your bucket, configure its permissions so that deltaDNA can export to it. To do this, open your bucket, browse to Permissions > Bucket Policy, and enter the following JSON, replacing <BUCKET> with the name of your bucket:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "deltaDNA-exporter",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::108146853768:user/exporter"
      },
      "Action": ["s3:ListBucket", "s3:PutObject"],
      "Resource": [
        "arn:aws:s3:::<BUCKET>",
        "arn:aws:s3:::<BUCKET>/*"
      ]
    }
  ]
}
```
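If you manage several buckets, or simply want to avoid typos when substituting the bucket name, the policy can be generated rather than edited by hand. The following Python sketch builds the same policy document shown above for a given bucket name. The function `build_bucket_policy` and the example bucket name are hypothetical and not part of any deltaDNA tooling.

```python
import json

# Principal ARN and actions copied from the bucket policy above.
DELTADNA_EXPORTER_ARN = "arn:aws:iam::108146853768:user/exporter"


def build_bucket_policy(bucket: str) -> str:
    """Return the deltaDNA exporter bucket policy as a JSON string.

    Hypothetical helper: substitutes `bucket` for <BUCKET> in the policy above.
    """
    if not bucket or "<" in bucket or ">" in bucket:
        raise ValueError("replace <BUCKET> with your real bucket name")
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "deltaDNA-exporter",
                "Effect": "Allow",
                "Principal": {"AWS": DELTADNA_EXPORTER_ARN},
                "Action": ["s3:ListBucket", "s3:PutObject"],
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # the bucket itself (ListBucket)
                    f"arn:aws:s3:::{bucket}/*",  # objects in the bucket (PutObject)
                ],
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(build_bucket_policy("my-game-archive"))
```

The printed JSON can be pasted into Permissions > Bucket Policy; if you script your AWS setup, the same string could instead be passed as the `Policy` argument of boto3's `put_bucket_policy`.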


Please enable default encryption on your bucket, set it to AES-256, and make sure the permissions are entered correctly; otherwise the export process will not succeed.
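If you prefer to script the encryption step, S3 default encryption can be set through the AWS SDK. The sketch below assumes the boto3 SDK for Python and an S3 client created with credentials for your AWS account. `put_bucket_encryption` and `get_bucket_encryption` are boto3 S3 client methods; the two wrapper functions are hypothetical helpers. `"AES256"` is the S3 identifier for AES-256 server-side encryption with S3-managed keys (SSE-S3).

```python
from typing import Any

# Default-encryption rule for SSE-S3 (AES-256 with S3-managed keys).
AES256_CONFIG = {
    "Rules": [
        {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
    ]
}


def enable_aes256(s3_client: Any, bucket: str) -> None:
    """Set AES-256 as the default server-side encryption for `bucket`."""
    s3_client.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=AES256_CONFIG,
    )


def is_aes256_enabled(s3_client: Any, bucket: str) -> bool:
    """Return True if any default-encryption rule on `bucket` uses AES256.

    boto3 may raise a botocore ClientError if the bucket has no
    encryption configuration at all.
    """
    response = s3_client.get_bucket_encryption(Bucket=bucket)
    rules = response["ServerSideEncryptionConfiguration"]["Rules"]
    return any(
        rule.get("ApplyServerSideEncryptionByDefault", {}).get("SSEAlgorithm") == "AES256"
        for rule in rules
    )

# Example (requires boto3 and AWS credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   enable_aes256(s3, "my-game-archive")
#   print(is_aes256_enabled(s3, "my-game-archive"))
```

Passing the client in as a parameter keeps the helpers independent of how credentials are configured.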

Next, configure your deltaDNA game to start exporting to this bucket. Open the Game Management page and click the ‘Edit game details’ button next to your game to reach its Game Details page, as shown below.

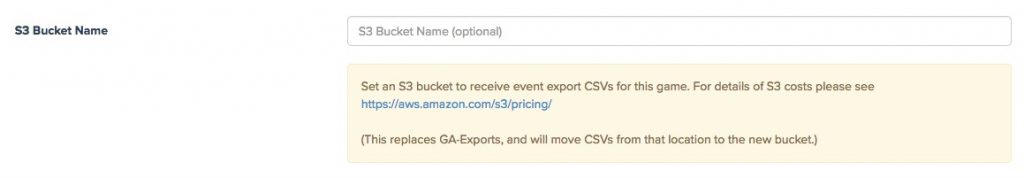

Finally, enter your bucket name in the S3 Bucket Name field, taking care to type it exactly, as an incorrect name will cause the export process to fail.
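Because a mistyped bucket name causes exports to fail, it can help to sanity-check the name before saving it. The sketch below checks a subset of the Amazon S3 general-purpose bucket naming rules: 3 to 63 characters; only lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit; no adjacent dots; not formatted as an IP address. It does not cover every rule, and it cannot tell you whether the bucket actually exists. `looks_like_valid_bucket_name` is a hypothetical helper.

```python
import re

# 3-63 chars of lowercase letters/digits/dots/hyphens, alphanumeric at both ends.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
# Names shaped like an IPv4 address (e.g. 192.168.5.4) are not allowed.
_IP_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def looks_like_valid_bucket_name(name: str) -> bool:
    """Check `name` against a subset of the S3 bucket naming rules."""
    if not _BUCKET_RE.fullmatch(name):
        return False
    if ".." in name:  # adjacent periods are not allowed
        return False
    if _IP_RE.fullmatch(name):
        return False
    return True


for candidate in ["my-game-archive", "My_Bucket", "192.168.5.4"]:
    print(candidate, looks_like_valid_bucket_name(candidate))
```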

Once you have completed these configuration steps, your historical data will be moved to the new bucket and all new export data will be stored there.

Within your bucket there will be a folder for each game, with subfolders for its DEV and LIVE environments, so you can use the same bucket for all the games on your account.

```
- <Bucket Name>
-- Events_<DDNA_ApplicationID>
--- DEV
--- LIVE
```
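Because each game and environment has its own folder, a consumer of the archive can restrict itself to one environment by listing a single key prefix. The sketch below assumes that the folders shown above correspond to `/`-delimited S3 key prefixes of the form `Events_<DDNA_ApplicationID>/<ENV>/`, and uses boto3's `list_objects_v2` paginator. The helper names and the example application ID are hypothetical.

```python
from typing import Any, Iterator

ENVIRONMENTS = ("DEV", "LIVE")


def archive_prefix(application_id: str, environment: str) -> str:
    """Key prefix for one game/environment folder (assumed layout)."""
    if environment not in ENVIRONMENTS:
        raise ValueError(f"environment must be one of {ENVIRONMENTS}")
    return f"Events_{application_id}/{environment}/"


def list_archive_keys(s3_client: Any, bucket: str, application_id: str,
                      environment: str) -> Iterator[str]:
    """Yield every object key under the game/environment prefix."""
    paginator = s3_client.get_paginator("list_objects_v2")
    prefix = archive_prefix(application_id, environment)
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # A page with no matching objects has no "Contents" entry.
        for obj in page.get("Contents", []):
            yield obj["Key"]

# Example (requires boto3 and AWS credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   for key in list_archive_keys(s3, "my-game-archive", "<your-application-id>", "LIVE"):
#       print(key)
```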

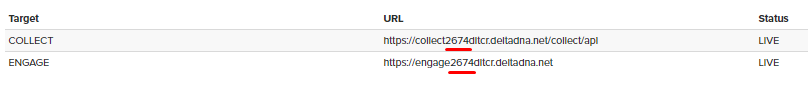

You can find the ApplicationID for each of your games on its Game Details page.

Files within your folders follow the naming convention EVENTS_<ApplicationID>_<FileID>.csv.gz, where FileID is numeric and incremental but not sequential: it increases from file to file, but there may be gaps between consecutive IDs. Files are usually written at roughly hourly intervals, although this can vary depending on a number of factors. The size of each individual file is capped, so multiple files will be created if the volume of events since the last archive run exceeds that limit.
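Because FileID is numeric, files should be ordered by the parsed number rather than alphabetically (compared as strings, `EVENTS_42_10.csv.gz` sorts before `EVENTS_42_9.csv.gz`), and a gap between consecutive IDs does not by itself mean a file is missing. The Python sketch below parses object keys that follow the naming convention above; the helper names and the example application ID `42` are hypothetical.

```python
import re
from typing import Iterable, List, Tuple

# EVENTS_<ApplicationID>_<FileID>.csv.gz; FileID is the trailing number.
_FILE_RE = re.compile(r"EVENTS_(?P<app_id>.+)_(?P<file_id>\d+)\.csv\.gz")


def parse_archive_name(key: str) -> Tuple[str, int]:
    """Return (application_id, file_id) for an archive object key."""
    name = key.rsplit("/", 1)[-1]  # drop any folder prefix
    match = _FILE_RE.fullmatch(name)
    if match is None:
        raise ValueError(f"not an event archive file: {key!r}")
    return match["app_id"], int(match["file_id"])


def sort_archive_keys(keys: Iterable[str]) -> List[str]:
    """Order keys by numeric FileID (gaps between IDs are expected)."""
    return sorted(keys, key=lambda k: parse_archive_name(k)[1])


keys = ["Events_42/LIVE/EVENTS_42_10.csv.gz", "Events_42/LIVE/EVENTS_42_9.csv.gz"]
print(sort_archive_keys(keys))
```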

Please note: the purpose of the S3 event archive is to provide you with your own copy of the data in case you ever need to load it into another system. It is completely separate from the data storage that deltaDNA uses to drive your analysis and reporting or to restore expired events back into your events table.
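To load an archive file into another system you will typically need to decompress and parse it first. The sketch below uses only the Python standard library to stream rows from a downloaded `.csv.gz` file. It assumes UTF-8 encoding and deliberately makes no assumption about the column layout or whether the first row is a header, since neither is described here; inspect a real file before mapping columns. `iter_archive_rows` is a hypothetical helper.

```python
import csv
import gzip
from typing import Iterator, List


def iter_archive_rows(path: str) -> Iterator[List[str]]:
    """Yield each CSV row of a gzip-compressed archive file as a list of strings."""
    # "rt" opens the gzip stream in text mode; newline="" is what the csv
    # module expects, so quoted fields containing newlines parse correctly.
    with gzip.open(path, "rt", encoding="utf-8", newline="") as handle:
        yield from csv.reader(handle)

# Example, with a hypothetical local file name:
#   for row in iter_archive_rows("EVENTS_42_10.csv.gz"):
#       print(row)
```

Streaming row by row keeps memory use flat even when a run produces several capped-size files.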

There are a few things you should take into consideration when exporting events to Amazon S3:

- Historical data will be moved to the new bucket within roughly 24 hours – if the bucket is deleted after this takes place then the data will be lost

- We require that AES-256 server-side encryption is enabled on the bucket you are configuring to accept event data – more information can be found at the Amazon S3 documentation site

- Any data going forward will be exported straight to the bucket provided – again, if the bucket is deleted then this data will be lost

- Once the bucket is set up and receiving exports correctly, do not adjust its permissions or settings, as this can cause future exports to fail

- Using AWS and S3 storage will incur charges; for more information, see the AWS pricing guide. Note that the number of PUT requests made will likely match the number of export files written to your S3 archive over a given time period

- In support of our efforts to be GDPR compliant, from 25th May 2018 only customer-owned storage of archive files is supported. All customers must have their own S3 bucket configured within the platform for deltaDNA to send archive files to. Historic customer archive files and export folders on the deltaDNA S3 account have been deleted.
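As a rough way to reason about the PUT-request part of your AWS bill, the number of export files can be estimated from the figures given above: files are usually written roughly hourly, with extra files when the per-file size cap is exceeded. The sketch below turns that into arithmetic, assuming one PUT per export file and one archive run per hour for every game/environment folder that receives events. It is an approximation under those assumptions only; actual write intervals vary, and the per-request price must come from the current AWS pricing page for your region, so no price is hard-coded. Both function names are hypothetical.

```python
def estimate_put_requests(games: int, environments_per_game: int, days: int,
                          runs_per_day: float = 24.0,
                          files_per_run: float = 1.0) -> float:
    """Approximate PUT requests as one request per export file written.

    Assumes roughly hourly archive runs (runs_per_day=24) for every
    game/environment folder, and `files_per_run` files per run
    (above 1 when the per-file size cap is exceeded).
    """
    folders = games * environments_per_game
    return folders * days * runs_per_day * files_per_run


def estimate_put_cost(put_requests: float, price_per_1000_puts: float) -> float:
    """Cost of the PUT requests, given a price per 1,000 requests from AWS pricing."""
    return put_requests / 1000 * price_per_1000_puts


puts = estimate_put_requests(games=2, environments_per_game=2, days=30)
print(puts)  # 2 * 2 * 30 * 24 = 2880.0
```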